tahnik@portfolio:~$ projects

A curated collection of MLOps, AI infrastructure, and open-source projects, ranging from production inference engines to developer tools and cloud-native platforms, all built with a focus on performance, scalability, and real-world impact.

Automated SRE with Agentic Workflow and eBPF

AI Engineering

This project combines agentic workflows with eBPF to automate Site Reliability Engineering. Instead of reactive firefighting, the system acts as an intelligent agent inside the infrastructure: it observes, diagnoses, and remediates issues in real time.

Tech Stack

PyTorch

Kubernetes

FastAPI

TypeScript

Python

CUDA

Ray

vLLM

AWS
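
The observe, diagnose, remediate loop described for the Automated SRE project can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the project's implementation: the `Signal` and `Diagnosis` types, the metric names, the thresholds, and the `PLAYBOOK` entries are all hypothetical, and a rule-based `diagnose` function stands in for whatever agent or model performs the diagnosis. The signals are plain Python objects standing in for data that would be collected through eBPF.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Signal:
    """A hypothetical observation, standing in for an eBPF-sourced metric."""
    service: str
    metric: str
    value: float


@dataclass
class Diagnosis:
    service: str
    cause: str


def diagnose(signal: Signal) -> Optional[Diagnosis]:
    """Map a signal to a probable cause using illustrative thresholds."""
    if signal.metric == "tcp_retransmits_per_s" and signal.value > 100:
        return Diagnosis(signal.service, "network_degradation")
    if signal.metric == "oom_kills" and signal.value > 0:
        return Diagnosis(signal.service, "memory_pressure")
    return None  # Nothing actionable in this signal.


# Hypothetical remediation playbook: cause -> function describing the action.
PLAYBOOK: Dict[str, Callable[[str], str]] = {
    "network_degradation": lambda svc: f"reroute traffic away from {svc}",
    "memory_pressure": lambda svc: f"restart {svc} with a higher memory limit",
}


def run_loop(signals: List[Signal]) -> List[str]:
    """Observe each signal, diagnose it, and collect the chosen remediation."""
    actions: List[str] = []
    for signal in signals:
        diagnosis = diagnose(signal)
        if diagnosis is None:
            continue
        actions.append(PLAYBOOK[diagnosis.cause](diagnosis.service))
    return actions
```

Here the remediation is returned as a description rather than executed, which keeps the sketch safe to run and makes each decision easy to inspect.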

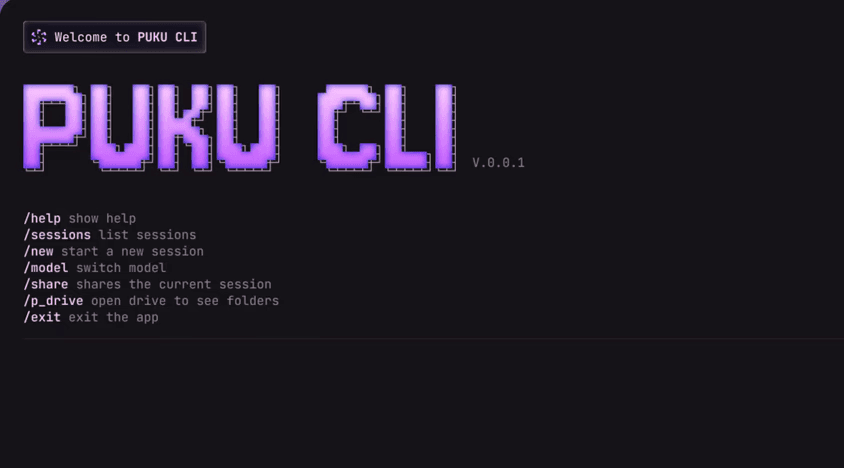

PUKU CLI

AI Engineering

PUKU CLI reimagines the command line as a collaborative agent rather than just a tool. It brings agentic workflows directly into the terminal: instead of juggling scripts, configs, and external dashboards, you can orchestrate tasks, automate flows, and query intelligent agents right where you work. The result combines traditional CLI efficiency with AI-driven adaptability, making the terminal a space to think, build, and iterate alongside an intelligent partner, not only a place to execute commands.

Tech Stack

TypeScript

Docker

Kubernetes

Python

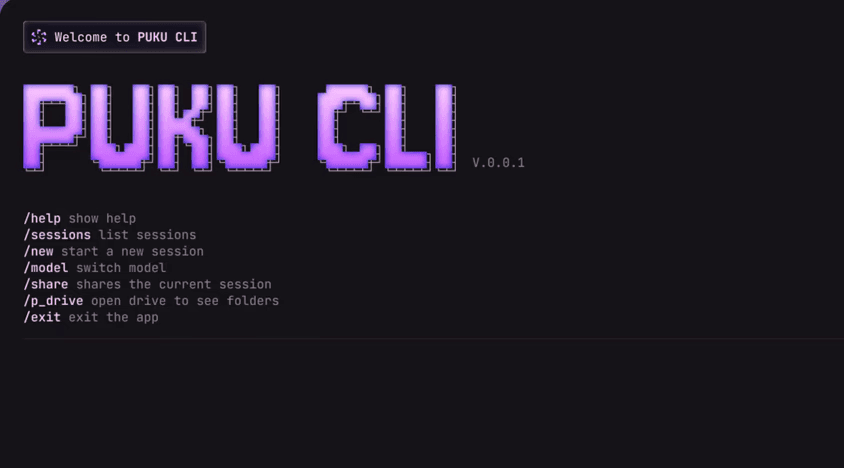

PUKU CLI

AI Engineering

PUKU CLI reimagines the command line as more than just a tool—it becomes a collaborative agent. Designed to bring agentic workflows directly into the terminal, PUKU CLI empowers developers to interact with AI-native processes seamlessly. Instead of juggling scripts, configs, and external dashboards, you can orchestrate tasks, automate flows, and query intelligent agents right where you work. It’s a fusion of traditional CLI efficiency with modern AI-driven adaptability, making the terminal not just a place to execute commands, but a space to think, build, and iterate alongside an intelligent partner.

Tech Stack

TypeScript

Docker

Kubernetes

Python

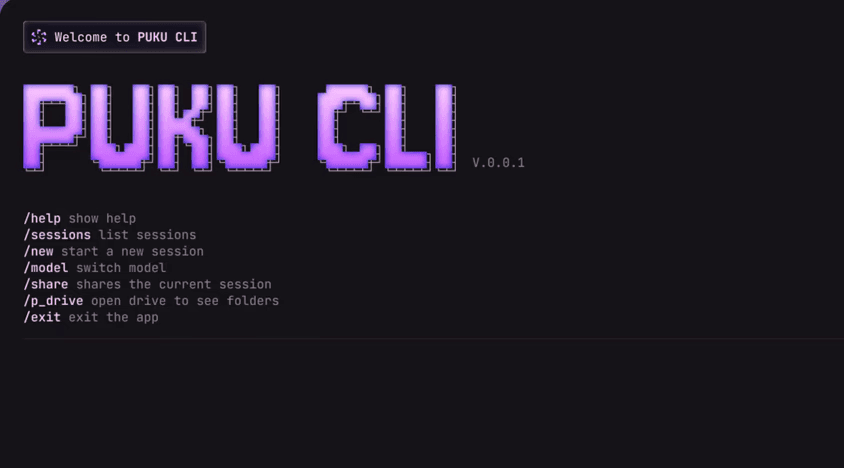

PUKU CLI

AI Engineering

PUKU CLI reimagines the command line as more than just a tool—it becomes a collaborative agent. Designed to bring agentic workflows directly into the terminal, PUKU CLI empowers developers to interact with AI-native processes seamlessly. Instead of juggling scripts, configs, and external dashboards, you can orchestrate tasks, automate flows, and query intelligent agents right where you work. It’s a fusion of traditional CLI efficiency with modern AI-driven adaptability, making the terminal not just a place to execute commands, but a space to think, build, and iterate alongside an intelligent partner.

Tech Stack

TypeScript

Docker

Kubernetes

Python

PUKU Editor

AI Engineering

PUKU Editor is an AI-powered fork of VS Code that accelerates coding with intelligent predictions, semantic understanding, and context-aware suggestions, guiding Poridhi.io learners in vibe and platform engineering.

Poridhi AI Studio

AI Engineering

Cloud-native AI development platform (ai.poridhi.io) enabling developers to train, fine-tune, and deploy models with GPU-accelerated workspaces, integrated notebooks, and one-click model serving.

Tech Stack

Python

Kubernetes

Docker

AWS

MicroVMs for AI Agents

MLOps

Lightweight microVM sandbox environments for AI agents. Provides isolated, secure execution environments with minimal overhead — enabling agents to run code, access filesystems, and interact with tools safely.

Minimal Scale Inference Engine

MLOps

Open-source minimal inference serving engine. Strips complexity to expose core concepts of LLM serving — continuous batching, KV-cache management, and efficient GPU scheduling for educational and production use.
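
Continuous batching, one of the concepts named above, can be illustrated with a small scheduler simulation. This is a minimal sketch and not the engine's actual code: the `Request` type and the `continuous_batching` function are hypothetical names, each request is assumed to need at least one token, each loop iteration stands in for one GPU decode step, and KV-cache management is not modeled at all.

```python
from collections import deque
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Request:
    request_id: str
    tokens_to_generate: int  # assumed to be at least 1
    generated: int = 0

    @property
    def done(self) -> bool:
        return self.generated >= self.tokens_to_generate


def continuous_batching(requests: List[Request], max_batch_size: int) -> Dict[str, int]:
    """Simulate decode steps and return the step at which each request finishes."""
    waiting = deque(requests)
    running: List[Request] = []
    finish_step: Dict[str, int] = {}
    step = 0
    while waiting or running:
        # Admit waiting requests into free batch slots before every step,
        # instead of waiting for the whole batch to drain.
        while waiting and len(running) < max_batch_size:
            running.append(waiting.popleft())
        step += 1
        # One decode step: every running request produces one token.
        for req in running:
            req.generated += 1
        # Retire finished requests so their slots are free for the next step.
        for req in running:
            if req.done:
                finish_step[req.request_id] = step
        running = [req for req in running if not req.done]
    return finish_step
```

With a batch size of 2 and requests needing 2, 5, and 3 tokens, the third request is admitted as soon as the first one finishes, so it does not have to wait for the 5-token request to complete as it would under static batching.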

Efficient Indexing Engine

MLOps

High-performance context indexing and retrieval engine for large codebases. Implements efficient chunking strategies, semantic search, and intelligent context window management for AI-assisted development workflows.
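
Two of the ideas mentioned above, chunking and context window management, can be sketched as follows. This is an illustration under assumptions, not the engine's implementation: `chunk_lines`, `score`, and `pack_context` are hypothetical helpers, whitespace-separated words approximate tokens, and a simple word-overlap score stands in for the embedding similarity a real semantic search would use.

```python
from typing import List


def chunk_lines(lines: List[str], chunk_size: int, overlap: int) -> List[List[str]]:
    """Split lines into windows of chunk_size lines that share `overlap` lines."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - overlap
    chunks: List[List[str]] = []
    for start in range(0, len(lines), step):
        chunks.append(lines[start:start + chunk_size])
        if start + chunk_size >= len(lines):
            break  # The last window already reaches the end of the input.
    return chunks


def score(query: str, chunk_text: str) -> float:
    """Toy relevance: fraction of query words found in the chunk.

    A stand-in for embedding similarity in a real semantic search.
    """
    query_words = set(query.lower().split())
    chunk_words = set(chunk_text.lower().split())
    return len(query_words & chunk_words) / len(query_words) if query_words else 0.0


def pack_context(query: str, chunks: List[str], token_budget: int) -> List[str]:
    """Greedily pick the most relevant chunks that fit within the budget."""
    ranked = sorted(chunks, key=lambda text: score(query, text), reverse=True)
    selected: List[str] = []
    used = 0
    for text in ranked:
        if score(query, text) == 0:
            continue  # Skip chunks with no overlap with the query.
        cost = len(text.split())  # Word count as a rough token estimate.
        if used + cost <= token_budget:
            selected.append(text)
            used += cost
    return selected
```

Overlapping windows reduce the chance that a definition is split across a chunk boundary, and the greedy packer trades optimality for simplicity: it never reorders or truncates chunks to squeeze more into the budget.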
